In this post, we will talk about the K-Nearest Neighbors Classifier, or K-NN Classifier for short. The K-Nearest Neighbors algorithm is widely used for classification, though you can use it for regression as well. If you are looking to get into the world of machine learning classification, this is one of the best and easiest models to start with, along with linear regression, of course.

This post explains how the K-Nearest Neighbors Classifier works. In this article, we will cover the following points:

- What is the K-Nearest Neighbors Classifier?

- How does the K-Nearest Neighbors Classifier work?

- Building a model using the K-Nearest Neighbors Classifier.

Classification problem and Problem definition

What is a classification problem? As the name suggests, and in the simplest terms, a classification problem arises when we have to divide a set of records (data) into several categories, or classes.

Let’s take the example of a clothing company that has designed a suit and launched it into the market. To run a better marketing campaign, the company wants to find out the age group of its customers based on the suit’s sales, so that it can target only the customers who belong to that age group.

Let’s assume the company has all of its customers’ orders in a CSV file and has hired you to solve this problem. The company is asking: which age group is most likely to buy this suit? No clue!

In this post, we will create a model to solve this problem and see how the KNN Classifier algorithm can be used in this situation.

What is the K-Nearest Neighbors Classifier and how does it work?

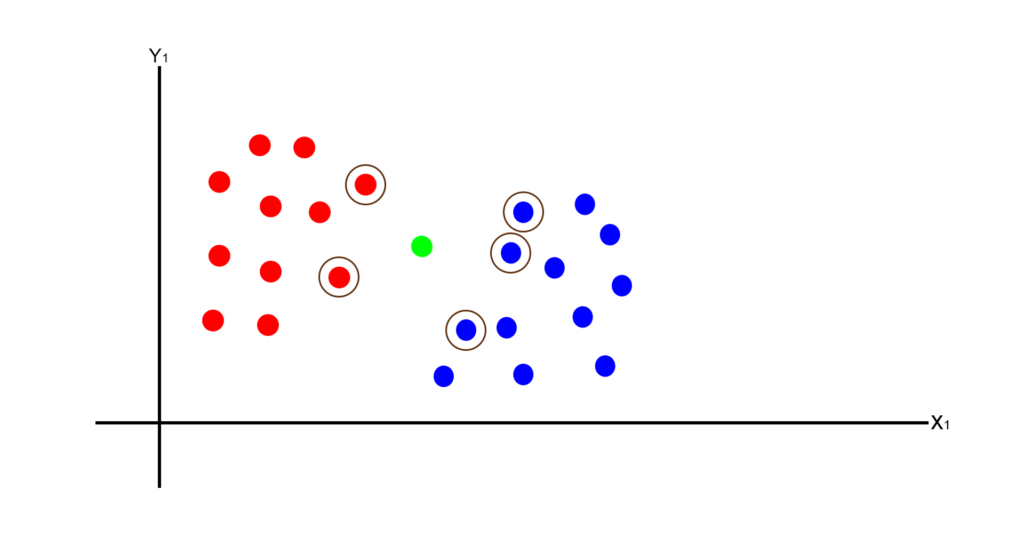

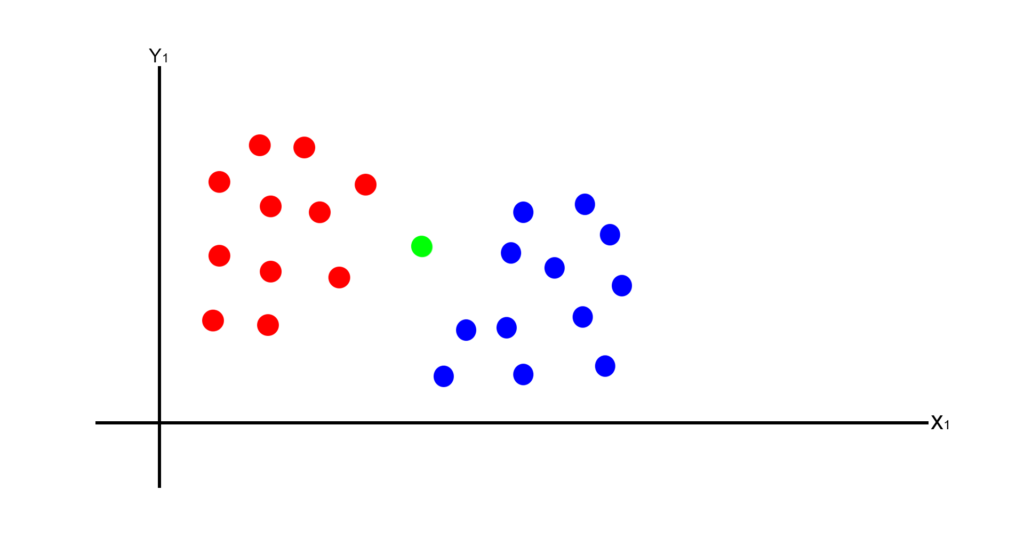

The K-Nearest Neighbors Classifier is a supervised machine learning classification algorithm. It assigns data points to categories based on their features, or attributes. As you can see in the graph, we have two categories of data: the Red dataset and the Blue dataset.

Now let’s say a new Green data point comes in, and we want to classify whether it belongs to the Red dataset or the Blue dataset.

To classify this point, we apply the K-Nearest Neighbors Classifier algorithm to the dataset by following these steps:

- First, choose how many neighbors around the new data point to consider. Let’s say we choose 5 neighbors, i.e., K = 5.

- Find the K nearest neighbors of the new data point using the Euclidean distance, as shown in the graph.

- Count how many of these K neighbors fall into each of the two categories.

- Assign the new data point to the category that holds the majority of those neighbors.
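To make the four steps above concrete, here is a minimal from-scratch sketch in plain Python, without scikit-learn. It is only an illustration and not the code used later in this post. The function names `euclidean_distance` and `knn_classify` and the toy Red/Blue points are hypothetical.

```python
import math
from collections import Counter


def euclidean_distance(a, b):
    """Straight-line distance between two points with the same number of features."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_classify(train_points, train_labels, new_point, k=5):
    """Classify new_point by a majority vote among its k nearest training points."""
    # Step 1: k is the number of neighbors to look at (K = 5 by default).
    # Step 2: measure the distance from the new point to every training point,
    # sort by distance and keep the k closest ones.
    by_distance = sorted(
        zip((euclidean_distance(p, new_point) for p in train_points), train_labels),
        key=lambda pair: pair[0],
    )
    nearest_labels = [label for _, label in by_distance[:k]]
    # Step 3: count how many of the k neighbors fall into each category.
    votes = Counter(nearest_labels)
    # Step 4: the category with the most votes wins.
    return votes.most_common(1)[0][0]


# Toy data (hypothetical): a Red cluster near (1, 1) and a Blue cluster near (7, 7).
red = [(1, 1), (1, 2), (2, 1), (2, 2)]
blue = [(6, 6), (6, 7), (7, 6), (7, 7)]
points = red + blue
labels = ["Red"] * len(red) + ["Blue"] * len(blue)

print(knn_classify(points, labels, (2, 3), k=5))  # Red (4 of the 5 nearest are Red)
```

With two categories, an odd K such as 5 avoids tied votes. If a tie does happen, this sketch simply returns whichever tied label `Counter.most_common` lists first.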

Preparing the data for training

Now that we know how the K-Nearest Neighbors Classifier works, the next step is to prepare the data for the K-Nearest Neighbors Classifier algorithm. Preparing the dataset is an essential and critical step in building a machine learning model.

To get accurate predictions, the data itself must be accurate; only then will your model be useful for predicting results. In our case, the data is made up and is for demonstration purposes only. In fact, I wrote a Python script to generate the CSV file. This CSV has records of users as shown below,

You can get the script that creates the CSV along with the source code.
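The original generator script ships with the source code and is not reproduced here. If you want to follow along without it, the sketch below writes a synthetic `supermall.csv` using only the standard `csv` and `random` modules. Everything in it is an assumption: the column names, the value ranges and the rule that decides who buys are all made up. The one thing it matches on purpose is the layout the model below expects. Counting from 0, as `iloc` does, age sits in column 2, salary in column 3, and a 0/1 purchase label in column 4.

```python
import csv
import random

# Hypothetical column names: the model code only relies on the column positions
# (age in column 2, salary in column 3, 0/1 "bought the suit" label in column 4).
HEADER = ["UserID", "Gender", "Age", "Salary", "Purchased"]


def make_fake_rows(n_rows=400, seed=0):
    """Generate synthetic user records using a made-up buying rule."""
    rng = random.Random(seed)
    rows = []
    for user_id in range(1, n_rows + 1):
        gender = rng.choice(["Male", "Female"])
        age = rng.randint(18, 60)
        salary = rng.randint(15_000, 150_000)
        # Assumed rule: users aged 30-45 earning more than 60,000 buy the suit,
        # then 10% of the labels are flipped so the two classes overlap a little.
        bought = 1 if 30 <= age <= 45 and salary > 60_000 else 0
        if rng.random() < 0.1:
            bought = 1 - bought
        rows.append([user_id, gender, age, salary, bought])
    return rows


def write_csv(path="supermall.csv", n_rows=400, seed=0):
    """Write the header and the synthetic rows to a CSV file."""
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(HEADER)
        writer.writerows(make_fake_rows(n_rows, seed))
```

Calling `write_csv()` creates `supermall.csv` in the current directory.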

K-Nearest Neighbors Classifier Machine learning algorithm with an example

=>To import the file that we created in the above step, we will use the pandas Python library. To implement the K-Nearest Neighbors Classifier model, we will use the scikit-learn library.

=>Now let’s create a model to predict whether the user is going to buy the suit or not. The first step in constructing a model is to import the required libraries.

=>Create a file named `knn_supermall.py` and write down the code below.

knn_supermall.py:

```python
# -*- coding: utf-8 -*-
"""
K-Nearest Neighbors Classifier Machine learning algorithm with example
@author: SHASHANK
"""

# Importing the libraries
import pandas as pd
from sklearn.preprocessing import StandardScaler
from sklearn.neighbors import KNeighborsClassifier
```

=>Now we will create a class called `Model`, as shown below. In this class, we will create three methods.

knn_supermall.py:

```python
# -*- coding: utf-8 -*-
"""
K-Nearest Neighbors Classifier Machine learning algorithm with example
@author: SHASHANK
"""


class Model:
    X = None
    Y = None
    standardScaler = None

    # Importing the dataset
    def importData(self):
        pass  # filled in in the next step

    # Applying feature scaling on the train data
    def doFatureScaling(self):
        pass  # filled in in a later step

    def isBuying(self):
        # We will call importData() in order to import the training data.
        self.importData()
        # We will call doFatureScaling() for scaling the values in our dataset.
        self.doFatureScaling()
```

=>Now let’s import the dataset in our `Model` class. Add the code below under the `importData()` method:

knn_supermall.py:

```python
# -*- coding: utf-8 -*-
"""
K-Nearest Neighbors Classifier Machine learning algorithm with example
@author: SHASHANK
"""

# Importing the libraries
import pandas as pd


class Model:
    X = None
    Y = None
    standardScaler = None

    # Importing the dataset
    def importData(self):
        dataset = pd.read_csv('supermall.csv')
        # Features: the age and salary columns (positions 2 and 3)
        self.X = dataset.iloc[:, [2, 3]].values
        # Label: column 4, where 1 means the user bought the suit
        self.Y = dataset.iloc[:, 4].values

    # Applying feature scaling on the train data
    def doFatureScaling(self):
        pass  # filled in in the next step

    def isBuying(self):
        # We will call importData() in order to import the training data.
        self.importData()
        # We will call doFatureScaling() for scaling the values in our dataset.
        self.doFatureScaling()
```

=>The next step in creating the model is to apply feature scaling to our dataset. We will use the scikit-learn library for feature scaling. We have already imported its `StandardScaler` class, so let’s use it to scale the features.

knn_supermall.py:

```python
# -*- coding: utf-8 -*-
"""
K-Nearest Neighbors Classifier Machine learning algorithm with example
@author: SHASHANK
"""

# Importing the libraries
import pandas as pd
from sklearn.preprocessing import StandardScaler


class Model:
    X = None
    Y = None
    standardScaler = None

    # Importing the dataset
    def importData(self):
        dataset = pd.read_csv('supermall.csv')
        self.X = dataset.iloc[:, [2, 3]].values
        self.Y = dataset.iloc[:, 4].values

    # Applying feature scaling on the train data
    def doFatureScaling(self):
        self.standardScaler = StandardScaler()
        # Learn each column's mean and standard deviation, then scale the data
        self.X = self.standardScaler.fit_transform(self.X)
```

Explanation:

- In our dataset, the salary field has very large numeric values compared with age. Feature scaling (here, standardization) transforms each feature to have zero mean and unit variance, so both features end up on a comparable scale.

- This matters especially for K-NN, because the classifier compares Euclidean distances. Without feature scaling, salary differences (thousands) would dwarf age differences (tens of years), so salary alone would decide which neighbors are nearest and age would barely count.

- In our code, we first create a `StandardScaler` object and then call its `fit_transform()` method on our data.
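To see what `StandardScaler` does, here is a small plain-Python sketch of standardization, z = (x - mean) / std. It is meant to mirror the per-column transformation `StandardScaler` applies: it uses the population standard deviation and leaves a constant column unscaled. It is still only an illustration, and the function name `standardize` and the ages and salaries are hypothetical rather than rows from the CSV.

```python
import math


def standardize(values):
    """Scale values to zero mean and unit variance: z = (x - mean) / std.

    Uses the population standard deviation (divide by n), as StandardScaler does,
    and leaves a constant column unscaled instead of dividing by zero.
    """
    mean = sum(values) / len(values)
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    if std == 0:
        std = 1.0
    return [(v - mean) / std for v in values]


# Hypothetical ages and salaries of four users (not rows from supermall.csv).
ages = [25, 35, 45, 55]
salaries = [20_000, 40_000, 60_000, 80_000]

scaled_ages = standardize(ages)
scaled_salaries = standardize(salaries)

# Euclidean distance between the first two users before and after scaling.
raw_distance = math.dist((ages[0], salaries[0]), (ages[1], salaries[1]))
scaled_distance = math.dist((scaled_ages[0], scaled_salaries[0]),
                            (scaled_ages[1], scaled_salaries[1]))
print(raw_distance, scaled_distance)
```

Before scaling, the distance between the first two users is just over 20,000 and comes almost entirely from salary. After scaling, their 10-year age gap and their 20,000 salary gap contribute equally to the distance.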

=>Let’s add the code under the `isBuying()` method. In this method, we fit the classifier to the training data we already have. We also take input from the user, and our model predicts the result based on that input. In the end, your model should look like this:

knn_supermall.py:

```python
# -*- coding: utf-8 -*-
"""
K-Nearest Neighbors Classifier Machine learning algorithm with example
@author: SHASHANK
"""

# Importing the libraries
import pandas as pd
from sklearn.preprocessing import StandardScaler
from sklearn.neighbors import KNeighborsClassifier


class Model:
    X = None
    Y = None
    standardScaler = None

    # Importing the dataset
    def importData(self):
        dataset = pd.read_csv('supermall.csv')
        self.X = dataset.iloc[:, [2, 3]].values  # age and salary columns
        self.Y = dataset.iloc[:, 4].values       # 1 = bought the suit, 0 = did not

    # Applying feature scaling on the train data
    def doFatureScaling(self):
        self.standardScaler = StandardScaler()
        self.X = self.standardScaler.fit_transform(self.X)

    def isBuying(self):
        self.importData()
        self.doFatureScaling()

        # Fitting the K-Nearest Neighbors algorithm to the training set
        # (metric='minkowski' with p=2 is the Euclidean distance)
        classifier = KNeighborsClassifier(n_neighbors=5, metric='minkowski', p=2)
        classifier.fit(self.X, self.Y)

        # input() is the Python 3 replacement for raw_input()
        userAge = float(input("Enter the user's age? "))
        userSalary = float(input("What is the user's salary? "))

        # Applying feature scaling on the test data with the scaler fitted on the training data
        testData = self.standardScaler.transform([[userAge, userSalary]])

        prediction = classifier.predict(testData)
        print('This user is most likely to buy the product.' if prediction[0] == 1
              else 'This user is not going to buy the product.')


model = Model()
model.isBuying()
```

Explanation: In the `isBuying()` method,

- We call the `importData()` and `doFatureScaling()` methods.

- Then we fit our dataset to the K-Nearest Neighbors Classifier algorithm using scikit-learn’s `KNeighborsClassifier` class, passing it three parameters:
  - The first parameter, `n_neighbors`, is the number of neighbors; in this case, it is 5.
  - The second and third parameters, `metric='minkowski'` and `p=2`, make the classifier use the Euclidean distance.

- Then we ask the user for inputs (age and salary) to use as test data.

- After receiving the inputs, we apply feature scaling to them. We use the scaler already fitted on the training data (`transform()`, not `fit_transform()`), so the inputs are scaled exactly like the training data.

- Lastly, we predict the result using the `classifier.predict()` method.
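For reference, the Minkowski distance selected by `metric='minkowski'` is defined below for two points x and y with n features. In our model n = 2, the scaled age and the scaled salary. Setting p = 2 gives the Euclidean distance used in the steps earlier, and p = 1 would give the Manhattan distance.

$$
d_p(\mathbf{x}, \mathbf{y}) = \left( \sum_{i=1}^{n} \lvert x_i - y_i \rvert^{p} \right)^{1/p},
\qquad
d_2(\mathbf{x}, \mathbf{y}) = \sqrt{\sum_{i=1}^{n} (x_i - y_i)^2}
$$

For example, for the points (1, 2) and (4, 6), p = 2 gives $\sqrt{3^2 + 4^2} = 5$, while p = 1 gives $3 + 4 = 7$.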

Executing the Model

Now your model is complete and ready to predict results. To execute the model, we call the `isBuying()` method of the `Model` class, as shown below:

```python
# -*- coding: utf-8 -*-
"""
K-Nearest Neighbors Classifier Machine learning algorithm with example
@author: SHASHANK
"""

# Create the model and run it (the Model class is defined in knn_supermall.py above)
model = Model()
model.isBuying()
```

Conclusion

That’s it for the K-Nearest Neighbors machine learning algorithm for now. I hope you now understand how the K-Nearest Neighbors algorithm works and how easy it is to implement. K-Nearest Neighbors can be used for both classification and regression. It is very popular in the machine learning world because it is simple, needs almost no training (it essentially memorizes the training data), and often predicts well.
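As a rough illustration of the regression case, a K-NN regressor predicts a number instead of a category, typically the average target value of the K nearest neighbors. The sketch below shows that idea in plain Python; the function name `knn_regress` and the toy data are hypothetical. scikit-learn offers the same idea as `KNeighborsRegressor`.

```python
import math


def knn_regress(train_points, train_targets, new_point, k=3):
    """Predict a number as the mean target of the k nearest training points."""
    # Sort (point, target) pairs by Euclidean distance to the new point.
    nearest = sorted(
        zip(train_points, train_targets),
        key=lambda pair: math.dist(pair[0], new_point),
    )[:k]
    # Average the targets of the k closest points.
    return sum(target for _, target in nearest) / len(nearest)


# Hypothetical one-feature data: two groups of points with different target values.
points = [(1,), (2,), (3,), (10,), (11,)]
targets = [10, 12, 14, 50, 52]

print(knn_regress(points, targets, (2,), k=3))  # 12.0, the mean of 10, 12 and 14
```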

If you liked this article, share it on social media and spread the word. Until then, happy machine learning.