Today we will talk about one of the most popular and used classification algorithm in machine leaning branch. In the previous post, we talked about the Support Vector Machine algorithm which is good for small datasets, but when it comes to classifying large datasets one should use none other than Naive Bayes Classifier algorithm. In this post, we will discover all about the Naive Bayes Classifier algorithm, why this algorithm is called as Navie Bayes, and yeah one more thing we won’t talk about apple and oranges if you know what I mean! We will also discuss where and when to use this algorithm. In this article, we will cover below-listed points,

- What is Naive Bayes Classifier?

- How does Naive Bayes Classifier work?

- We will build a model using Naive Bayes Classifier.

Classification problem and Problem definition

What is a classification problem? As the name suggests and in the simplest term, a classification problem used when we have to divide the set of records(data) into several parts.

Let’s take the example of a clothing company, this company has built a Suit and launched into a market. The company is trying to find out the age group of the customers based on the sales of the suits, for the better marketing campaign. So that company can target only those customers who belong to that age group.

Let’s assume the company has all the orders of the customers in CSV file and they hired you to solve this problem. The company is asking which age group is most likely to buy this suit? No clue!

Well, we will create a model to solve this problem in this post and we will understand how we can use the Naive Bayes Classifier algorithm in this situation.

What is Naive Bayes Classifier?

Naive Bayes Classifier is probabilistic supervised machine learning algorithm. Let’s first understand why this algorithm is called Navie Bayes by breaking it down into two words i.e., Navie and Bayes.

In our Problem definition, we have a various user in our dataset. The features of each user are not related to other user’s feature. First, this algorithm creates probability for each user.

Now with this prior knowledge, this algorithm can predict if the new user(data) in our dataset is going to buy the suit or not, hence the name Navie.

Now, Why Bayes? To calculate the probability it uses the Bayes theorem hence the algorithm is called as Naive Bayes. We will talk more about Bayes theorem down the road.

With the help of this algorithm, you can perform both binary classifications as well as non-binary classifications. It means that your dataset can be divided into more than two classes(categories).

How does Naive Bayes Classifier work?

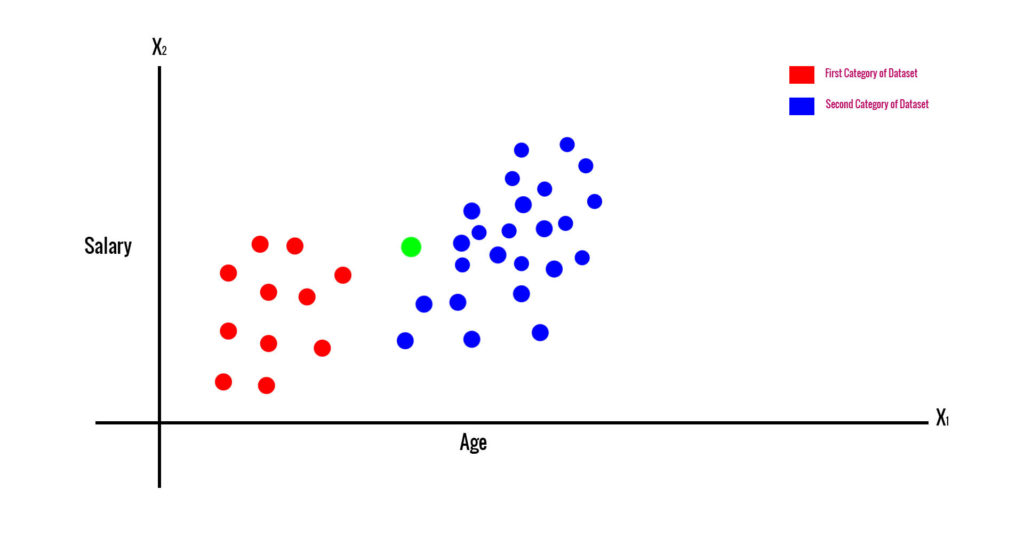

Again we will stick to our problem definition. We have to predict if the new incoming user is going to buy the suit based on the age and salary. In the below Graph we have 50 users having features age and salary.

- So the red dots are the class of users who are not going the suit

- Blue dots are the class of users who are going to buy the suit.

- The Green dot is a new user for whom we are going to predict what is the probability of buying the suit!

Here we will apply Bayes theorem on this dataset and we will create two classes(categories) if the user is buying or not buying the suit. Based on the features of the incoming user we can predict if the user is going to buy the suit or not.

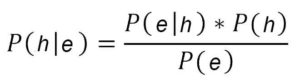

To create two separate classes, first, we have to apply Bayes theorem so let’s do it. Below is the formula for Bayes theorem, where h stands for hypothesis and e stands for evidence.

- P(h|e) means the probability of a hypothesis(h) for evidence(e). It is called the posterior probability.

- P(e|h) is the probability of evidence(e) for the hypothesis(h) was true. It is called the Likelihood.

- P(h) is the probability of ahypothesis(h) was true irrespective of the data. It is called the prior probability of ahypothesis(h).

- P(e) is the probability of evidence(e). This is called the Marginal Likelihood.

To predict the results for Green point, the Naive Bayes algorithm follows three steps.

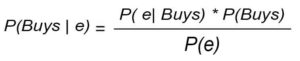

- The first step is to find out the probability users who are going to buy the suits. On applying this theorem, your above formula will look like this,

- The second step is to find out the probability users who are not going to buy the suits.

- Based on the above two probabilities, the algorithm will predict results for new users.

Preparing the data for training

Now we are aware how Naive Bayes Classifier works. The next step is to prepare the data for the Machine learning Naive Bayes Classifier algorithm. Preparing the data set is an essential and critical step in the construction of the machine learning model.

To predict the accurate results, the data should be extremely accurate. Then only your model will be useful while predicting results. In our case, the data is completely inaccurate and just for demonstration purpose only. In fact, I wrote Python script to create CSV. This CSV has records of users as shown below,

You can get the script to CSV with the source code.

Naive Bayes Classifier Machine learning algorithm with example

There are four types of classes are available to build Naive Bayes model using scikit learn library.

Gaussian Naive Bayes: This model assumes that the features are in the dataset is normally distributed.Multinomial Naive Bayes: This Naive Bayes model used for document classification. This model assumes that the features are in the dataset is multinomially distributed.Complement Naive Bayes: This model is useful when we have imbalanced features in our dataset.Bernoulli Naive Bayes: This model is useful when there are more than two or multiple features which are assumed to have binary variables.

In this post, we will create Gaussian Naive Bayes model using GaussianNB class of scikit learn library.

=>To import the file that we created in above step, we will usepandas python library. To implement the Naive Bayes Classifier model we will use thescikit-learn library.

=>Now let’s create a model to predict if the user is gonna buy the suit or not. The first step to construct a model is to create import the required libraries.

=>Create filenaive_bayes_supermall.pyand write down the below code.

naive_bayes_supermall.py:

# -*- coding: utf-8 -*- """ Naive Bayes Classifier Machine learning algorithm with example @author: SHASHANK """ # Importing the libraries import pandas as pd from sklearn.preprocessing import StandardScaler from sklearn.naive_bayes import GaussianNB

=>Now we will create a class called Modelshown below. In this class, we will create three methods.

naive_bayes_supermall.py:

# -*- coding: utf-8 -*-

"""

Naive Bayes Classifier Machine learning algorithm with example

@author: SHASHANK

"""

class Model:

X = None

Y = None

standardScaler = None

# Importing the dataset

def importData(self):

# Applying feature scaling on the train data

def doFatureScaling(self):

def isBuying(self):

# we will call importData(), in order to import the test data.

self.importData()

# We will call doFatureScaling() for scaling the values in our dataset

self.doFatureScaling()=>Now let’s import the data set in ourmodel class. Under theimportData() method add the below code as shown below,

naive_bayes_supermall.py:

# -*- coding: utf-8 -*-

"""

Naive Bayes Classifier Machine learning algorithm with example

@author: SHASHANK

"""

class Model:

X = None

Y = None

standardScaler = None

# Importing the dataset

def importData(self):

dataset = pd.read_csv('supermall.csv')

self.X = dataset.iloc[:, [2,3]].values

self.Y = dataset.iloc[:, 4].values

# Applying feature scaling on the train data

def doFatureScaling(self):

def isBuying(self):

# we will call importData(), in order to import the test data.

self.importData()

# We will call doFatureScaling() for scaling the values in our dataset

self.doFatureScaling()=>The next step of the creating a model is to add feature scaling on our data set. We will usescikit-learn libraryfor feature scaling. We have already imported a library for it. Let’s use that library to do the feature scaling.

naive_bayes_supermall.py:

# -*- coding: utf-8 -*-

"""

Naive Bayes Classifier Machine learning algorithm with example

@author: SHASHANK

"""

class Model:

X = None

Y = None

standardScaler = None

# Importing the dataset

def importData(self):

dataset = pd.read_csv('supermall.csv')

self.X = dataset.iloc[:, [2,3]].values

self.Y = dataset.iloc[:, 4].values

# Applying feature scaling on the train data

def doFatureScaling(self):

self.standardScaler = StandardScaler()

self.X = self.standardScaler.fit_transform(self.X)Explanation:

- In our dataset, we have huge numeric values for the salary field. Feature scaling will normalize our huge numeric values into small numeric values.

- Let’s say if we have billions of records in our dataset. If we train our model without applying Feature scaling, then the machine will take time too much time to train the model.

- In our code first, we will create an object of

StandardScalerand then we willfit_transform()method on our data.

=>Let’s add the code underisBuying()method. In this method, we will add code to fit the train data that we have already have. Also, we will take input from the user and based on that input our model will predict the results. So in the end, your model should look like this:

naive_bayes_supermall.py:

# -*- coding: utf-8 -*-

"""

Naive Bayes Classifier Machine learning algorithm with example

@author: SHASHANK

"""

# Importing the libraries

import pandas as pd

from sklearn.preprocessing import StandardScaler

from sklearn.naive_bayes import GaussianNB

class Model:

X = None

Y = None

standardScaler = None

# Importing the dataset

def importData(self):

dataset = pd.read_csv('supermall.csv')

self.X = dataset.iloc[:, [2,3]].values

self.Y = dataset.iloc[:, 4].values

# Applying feature scaling on the train data

def doFatureScaling(self):

self.standardScaler = StandardScaler()

self.X = self.standardScaler.fit_transform(self.X)

def isBuying(self):

self.importData()

self.doFatureScaling()

# Fitting Naive Bayes algorithm to the Training set

classifier = GaussianNB()

classifier.fit(self.X, self.Y)

userAge = float(raw_input("Enter the user's age? "))

userSalary = float(raw_input("What is the salary of user? "))

# Applying feature scaling on the test data

testData = self.standardScaler.transform([[userAge, userSalary]])

prediction = classifier.predict(testData)

print 'This user is most likely to buy the product' if prediction[0] == 1 else 'This user is not gonna buy the your product.'

Explanation:InisBuying()method,

- We will call

importData()anddoFatureScaling()methods. - Then we are fitting our dataset to the Naive Bayes Classifier algorithm by using

GaussianNBlibrary. - Then using python we are asking for inputs from the user as a Test data.

- After receiving inputs from the user, we will apply feature scaling on the inputs.

- Lastly, we are predicting the values using

classifier.predict()method.

Executing the Model

Now your model is complete and ready to predict the result. To execute the model we will call theisBuying()method of the class model as shown below,

# -*- coding: utf-8 -*- """ Support Vector Machine Machine learning algorithm with example @author: SHASHANK """ model = Model() model.isBuying()

Conclusion

This brings us to the end of this post, I hope you enjoyed doing the Naive Bayes classifier as much as I did. If you are working on large datasets than this algorithm will work best, in my personal opinion you should always try this algorithm when you are working classification problems.

In this post, we didn’t cover the mathematical side of this algorithm for the sake of simplicity. So, I will urge you to learn the math behind Bayes theorem from google. By doing so, you will understand how it works and what is the logic behind the algorithm. So, for now, that’s it from my side.

If you like this article share it on your social media and spread a word about it. Till then, happy machine learning.

Very nice explanation even non-technical guys can be understand it is realy appreciatable.

Thank You!